29 Mar Garbage Sites Still Get Ranked… Why?

From time to time I’ll come across an excellent post on another site and republish it here. Why? Because YOU need to read it, and posting just a “link” doesn’t quite cut it when it comes to content requirements. This is one such post, from http://www.seomoz.org/. Read it, study it, understand it.

Enjoy!

You’ve built a fantastic site full of excellent, link-worthy content. You’re actively building relationships in the social space that send quality traffic to your site and establish your authority within your industry. You’ve focused on creating a great user experience and delivering value to your site’s visitors… and yet you’re still getting outranked by garbage websites that objectively don’t deserve to show up ahead of you.

In short, you’re following the advice that all the top SEO experts are giving out, but you simply can’t pull the same quantity of links that some of your less ethical competition is nabbing. Maybe we can learn a thing or two from that trash that’s pushing you down in the SERPs and start copying their links.

A Prime Example of Garbage in the SERPs

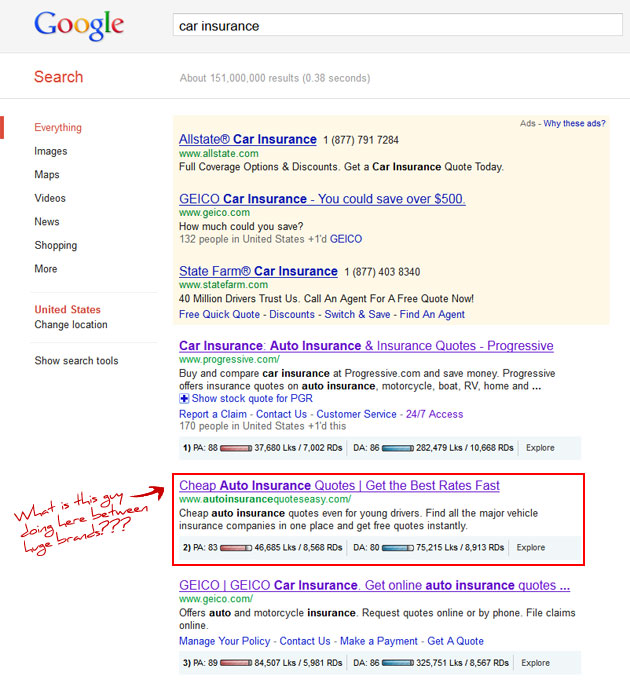

To determine how low-value sites are able to rank for competitive terms, we’re going to dissect one of the most astonishing achievements in SERP manipulation I’ve seen in a long time: a situation where several billion-dollar brands got stomped by a low-quality site for some of the most competitive (and valuable) terms online, namely “car insurance” and “auto insurance” (and a host of related terms).

Take a look at these search results and I’m sure you can spot the outlier (hint… I put a box around it, wrote something next to it, and drew a big red arrow pointing to it):

Found it? Awesomesauce! There, sitting pretty at #2 for one of the Holy Grail search terms, right in between Progressive and Geico, was… AutoInsuranceQuotesEasy.com? Not the most trustworthy-looking domain name, but to rank second for “car insurance” (and fourth for “auto insurance”) it must be an impressive site, right?

Surely it’s going to be stuffed with linkbait content like lists of the least and most expensive cars to insure, lists of the most expensive cities and states to insure a vehicle, calculators for determining the right amount of insurance to get, tips for lowering your insurance rate, and lists of the most frequently stolen or vandalized cars. It’ll be very attractive and super user-friendly. Has to be, doesn’t it?

A Look at the Site

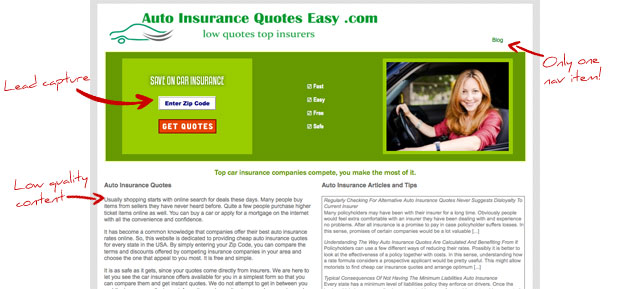

Far from it. It’s a large lead-capture form at the top of the page (powered by Sure Hits), some low-quality text content below it, and a single navigation item (leading to the site’s blog). Nothing else.

The site’s blog isn’t much better: it’s jam-packed with poorly written content, most of which exists only to put a reasonable amount of space between repetitions of keyword phrases.

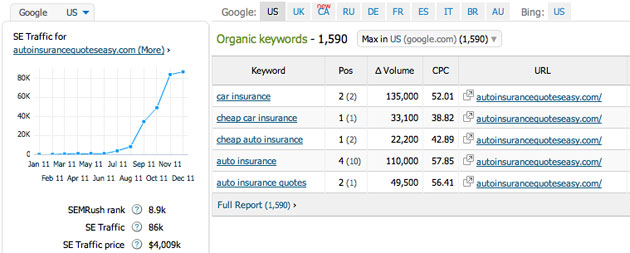

When I find a site like this ranking in a competitive niche, my first thought is always that the search result I’m seeing might be an anomaly. I double-checked with my buddy Ian Howells (@ianhowells – smart dude) and he was seeing the same thing. Then I looked it up at SEMrush.com, where I saw this:

Looks Like Google Forgot to Take Out the Trash

This was no fluke. In under a year, this site had gone from a newly registered domain (December 2010 registration date) to the top of the mountain of search, ranking for some of the most competitive terms online. SEMrush estimated the paid search equivalent value of the traffic received by this domain at over $4 million… per month!

That’s a lot of scratch generated by a site that’s not employing a single one of the methods most leading SEO experts currently preach… so what gives?

Well, to find out, let’s head on over to Open Site Explorer and Majestic SEO to take a closer look at how a simple lead capture site was able to build up enough authority to outrank the world’s most famous insurance-touting lizard… and what we can learn from it to help our sites climb the search rankings.

From Majestic SEO, I was able to pull a backlinks discovery chart that shows the approximate number of new links added per month. It’s pretty clear that these guys weren’t messing around with a conservative link velocity. They really got after it, adding a considerable number of new links early in the site’s life cycle and becoming even more aggressive starting in October. This second push correlates pretty nicely with the spike in organic search traffic shown above from SEMrush.
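If you have a raw backlink export rather than a ready-made chart, link velocity is just a count of new links per month. Here’s a minimal sketch, assuming each backlink row carries a “first seen” date as an ISO string (e.g. "2011-10-03"); the real export column name and format may differ, and the sample dates are made-up toy data.

```python
from collections import Counter
from datetime import date


def links_per_month(first_seen_dates):
    """Count newly discovered backlinks per calendar month.

    Assumes `first_seen_dates` is an iterable of ISO date strings,
    one per backlink (a hypothetical export format).
    """
    counts = Counter()
    for raw in first_seen_dates:
        d = date.fromisoformat(raw)
        counts[(d.year, d.month)] += 1
    # Chronological order makes spikes (like an October push) easy to spot.
    return sorted(counts.items())


# Toy data, not taken from the case-study site.
sample = ["2011-01-15", "2011-10-02", "2011-10-09"]
for (year, month), n in links_per_month(sample):
    print(f"{year}-{month:02d}: {n} new links")
```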

So we know that this site grabbed top rankings for some super competitive keywords and held the position for several months. We also know it employed an aggressive link building campaign. It’s time to dive deeper into what these links looked like and where they were placed to see if we can replicate them.

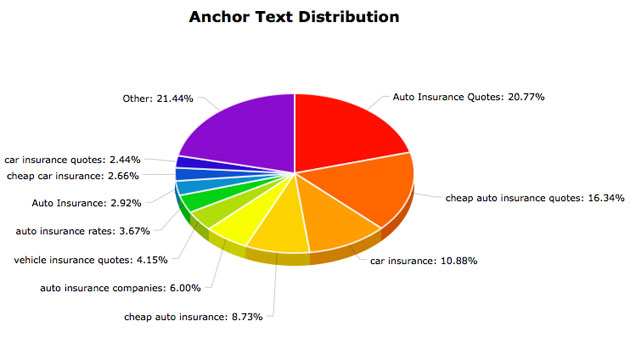

After exporting AutoInsuranceQuotesEasy.com’s link profile from OSE, I started analyzing it, focusing initially on anchor text distribution. Looking at the chart below, you’ll notice that the site is very heavily weighted towards targeted anchor text: its top 10 most frequently occurring anchor texts made up nearly 80% of all links.
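The “top 10 anchors make up nearly 80% of links” figure is a simple frequency count. A minimal sketch, assuming you’ve already pulled the anchor text column out of the export into a list of strings; normalizing case and whitespace is my own choice, not necessarily how OSE groups anchors.

```python
from collections import Counter


def top_anchor_share(anchors, n=10):
    """Return the n most common anchor texts and the share of all links they cover."""
    # Normalize case and whitespace so "Car Insurance" and "car  insurance" count together.
    counts = Counter(" ".join(a.lower().split()) for a in anchors)
    top = counts.most_common(n)
    covered = sum(count for _, count in top)
    share = covered / len(anchors) if anchors else 0.0
    return top, share


# Toy anchors for illustration only.
anchors = ["car insurance"] * 5 + ["Auto Insurance"] * 3 + ["click here", "my site"]
top, share = top_anchor_share(anchors, n=2)
print(top, f"{share:.0%}")
```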

Within the subset of links that contain targeted anchor text, there’s a fair amount of variety, though the vast majority of the links contain some modified form of “auto insurance” and “car insurance.” All interesting information, but before looking at this chart, we probably all knew the site would be ranking on heavy use of anchor text. That isn’t super actionable data: if we were looking to compete in this space, we’d already plan on trying to get lots of exact and partial match links.

But what if there were an easy way to burn through this list of links and spot the ones that would be super easy to copy? What if, without having to manually load a single page, we could identify all of the blog comments, blogroll links, author bios, footer links, resource boxes, link lists, and private blog network posts? Wouldn’t that be helpful? Even for the ultra-white hats, this approach could filter out these cheap links and make it more efficient to identify legitimate editorial links that you might try to match.

Taking Link Analysis a Step Further — Using Semantic Markup to Identify Link Types

The good news is that we can quickly sift through a mountain of backlinks and reliably segment them into groups. Thanks to the adoption of semantic markup over the past few years, most websites happily give this information away.

“What’s semantic markup?” some of you ask. It’s code that inherently has meaning: code that describes its own purpose to the browser (or crawler). In a perfect world, that means elements like `<header>`, `<article>`, and `<footer>`, all of which are available in HTML5. But ever since id and class names arrived in markup, developers have been using them to add meaning to what would otherwise be ambiguous code. Most of the web is now built to look like this:

```html
<div class="comment">My comment goes here</div>
```

or this:

```html
<div id="footer">Copyright info, etc.</div>
```

Sadly, it doesn’t quite look like this:

```html
<a href="my-spammy-website" class="spam-links">My spammy anchor text</a>
```

But there’s enough meaning built into most id and class names that we can quickly start to discern what most elements mean. Since this is a pretty standard convention, it’s not too hard to build a crawler that analyzes this data for us by looping through each line of our OSE export. For each entry it will (this is going to get a little nerdy, so bear with me):

- Fetch the URL of the page that contains the link to the target site

- Parse that page’s HTML into a Document Object Model (DOM) object

- Run an XPath query to find the link on the page with a matching href value

- Traverse the DOM in reverse (from the link up toward the root), looking for ancestor elements with a class or id value that matches a link-type pattern

In non-geek speak, that means that we check to see if our link sits inside of a container that has a recognizable id or class name.
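The steps above use a DOM plus an XPath query. To keep this sketch dependency-free, I’ve swapped in a different technique that gets the same answer: Python’s built-in `html.parser` streams through the page while keeping a stack of open elements, and when it hits a link to the target site it snapshots that stack as the link’s ancestor chain (nearest container first). Matching on host name, with a leading “www.” ignored, is my own assumption about what counts as a “matching href”; all class and function names here are hypothetical, not the author’s tool.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Elements that never get a closing tag, so they must not be pushed on the stack.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


def _host(url):
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


class LinkLocator(HTMLParser):
    """Tracks open elements and records the ancestors of the first link to target_host."""

    def __init__(self, target_host):
        super().__init__()
        self.target_host = target_host
        self.stack = []         # open elements as (tag, id, class)
        self.ancestors = None   # innermost-first once the link is found

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        href = a.get("href") or ""
        if tag == "a" and self.ancestors is None and _host(href) == self.target_host:
            # Snapshot the containers around the link, nearest first.
            self.ancestors = list(reversed(self.stack))
        if tag not in VOID:
            self.stack.append((tag, a.get("id") or "", a.get("class") or ""))

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag; ignore stray end tags.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break


def find_link_ancestors(html, target_url):
    """Return the link's ancestor chain (innermost first), or None if no such link exists."""
    parser = LinkLocator(_host(target_url))
    parser.feed(html)
    parser.close()
    return parser.ancestors


page = ('<html><body><div id="content"><p>Great post.</p></div>'
        '<div class="comment-37268"><p>Nice! '
        '<a href="http://www.autoinsurancequoteseasy.com/">car insurance</a></p></div>'
        '</body></html>')
print(find_link_ancestors(page, "http://autoinsurancequoteseasy.com"))
```

The stack approach tolerates sloppy, unclosed markup better than a strict XML parser would, but a real crawler would probably want a forgiving DOM library instead.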

Classifying the Links

If, during that reverse traversal, it finds a match, we can effectively label that link. So if our link appears inside a div with the id of “footer” we can label that as a footer link. If it’s in a div or paragraph (or any other element) with the class of “comment-37268,” we can still call it a match and note that it’s a comment link.
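Labeling then comes down to checking each ancestor’s id and class against a list of patterns, nearest container first, and stopping at the first hit. The pattern list below is a hypothetical starter set, not the one used in the original tool; its order matters, because an element matching several patterns gets the first label listed.

```python
import re

# Illustrative patterns only; a real list would be longer and tuned by hand.
LINK_TYPE_PATTERNS = [
    ("comment", re.compile(r"comment", re.I)),
    ("author bio", re.compile(r"author|bio", re.I)),
    ("blogroll", re.compile(r"blogroll", re.I)),
    ("sidebar", re.compile(r"sidebar|widget", re.I)),
    ("resource box", re.compile(r"resource", re.I)),
    ("footer", re.compile(r"footer", re.I)),
]


def classify(ancestors):
    """Label a link from its ancestor chain: a list of (tag, id, class), innermost first."""
    for _tag, el_id, el_class in ancestors:
        haystack = f"{el_id} {el_class}"
        for label, pattern in LINK_TYPE_PATTERNS:
            # re.search finds "comment" inside "comment-37268", so numbered ids still match.
            if pattern.search(haystack):
                return label
    return "unknown"


print(classify([("p", "", ""), ("div", "", "comment-37268"), ("body", "", "")]))
```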

We can add a second level of information on comment links by searching the DOM for all external links on the page and counting the total. The higher the number, the greater the likelihood that the site is auto-approving comments.
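Counting external links on a comment page is a quick pass over every `<a href>`. A minimal sketch using the standard library, assuming “external” means any http(s) link whose host differs from the page’s own host (so subdomains count as external here). The article doesn’t give a threshold for “auto-approving,” so this just reports the number.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ExternalLinkCounter(HTMLParser):
    """Counts <a href> links that point off the page's own host."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlparse(page_url).netloc.lower()
        self.external = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the page URL before comparing hosts.
        target = urlparse(urljoin(self.page_url, href))
        if target.scheme in ("http", "https") and target.netloc.lower() != self.page_host:
            self.external += 1


def count_external_links(html, page_url):
    parser = ExternalLinkCounter(page_url)
    parser.feed(html)
    parser.close()
    return parser.external


html = ('<a href="/about">About</a>'
        '<a href="http://spam1.example/">a</a>'
        '<a href="https://spam2.example/x">b</a>'
        '<a href="mailto:someone@example.com">mail</a>')
print(count_external_links(html, "http://blog.example.com/post"))
```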

If we fail to retrieve the page or we get the page but the link is no longer present, we can label the link as dead.
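The “dead” check covers both failure cases in the sentence above: the page can’t be fetched, or it loads but the link is gone. A minimal sketch, assuming UTF-8 pages (no charset detection) and host-level matching with “www.” ignored; the fetch function is passed in as a parameter so it can be swapped or stubbed, and all names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse
from urllib.request import urlopen


class HrefCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def _host(url):
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def fetch(url, timeout=10):
    """Return the page body as text, or None if it can't be retrieved."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            # Assumes UTF-8; undecodable bytes are replaced rather than raising.
            return resp.read().decode("utf-8", errors="replace")
    except (OSError, ValueError):  # network/HTTP errors, timeouts, malformed URLs
        return None


def liveness(page_url, target_url, fetcher=fetch):
    html = fetcher(page_url)
    if html is None:
        return "dead"  # page could not be retrieved
    collector = HrefCollector()
    collector.feed(html)
    if any(_host(h) == _host(target_url) for h in collector.hrefs):
        return "live"
    return "dead"  # page loaded, but the link is no longer there
```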

We can also do some simple domain matching against known article directories, web directories, and web 2.0 properties, though for this example I only used a few domains for each of these groupings. With larger lists, the “unknown” group would likely shrink.
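Domain matching is a lookup of the linking page’s host against a few lists, counting subdomains (someone.blogspot.com) as matches. The seed domains below are examples I picked for illustration, not the author’s lists.

```python
from urllib.parse import urlparse

# Example seed lists only; real lists would be much longer.
KNOWN_DOMAINS = {
    "article directory": {"ezinearticles.com", "articlesbase.com"},
    "web directory": {"dmoz.org", "botw.org"},
    "web 2.0": {"blogspot.com", "wordpress.com", "tumblr.com"},
}


def domain_type(page_url):
    """Return the link type implied by the linking page's domain, or None if unlisted."""
    host = urlparse(page_url).hostname or ""  # .hostname is lowercased, port stripped
    for label, domains in KNOWN_DOMAINS.items():
        # Match the domain itself or any of its subdomains, but not look-alikes.
        if any(host == d or host.endswith("." + d) for d in domains):
            return label
    return None


print(domain_type("http://someone.blogspot.com/2011/10/post.html"))
```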

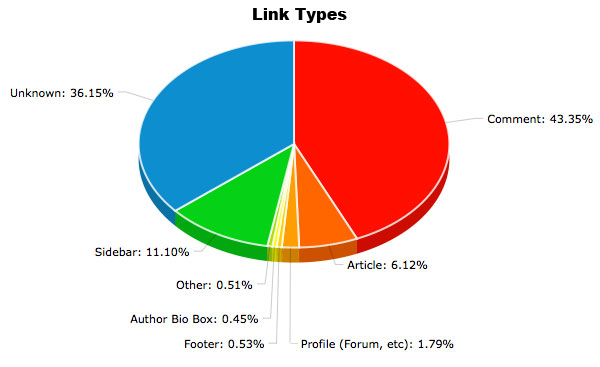

With the crawler built and running, I was able to pull the following data for this site (dead links removed):

The chart above starts to paint a much clearer picture of how these rankings were built — blog commenting, article marketing, and sidebar links played a big role in boosting this site’s link profile.
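A breakdown like the one in that chart is a percentage tally of the live links per label. A minimal sketch, assuming each link already carries one label string and that “dead” is the label for removed links; the toy labels are made up.

```python
from collections import Counter


def type_breakdown(labels):
    """Percent of live links per link type, with dead links excluded."""
    live = [label for label in labels if label != "dead"]
    if not live:
        return {}
    counts = Counter(live)
    return {label: round(100 * n / len(live), 1) for label, n in counts.most_common()}


print(type_breakdown(["comment", "comment", "dead", "footer", "unknown"]))
```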

What do those links have in common? They are very unlikely to be legitimate editorial links. Instead, it looks like the rankings for this site were built on “link dropping,” the process of leveraging control of an independent site to leave your own link without oversight or review.

Since we’ve been able to automatically identify roughly 65% of the live links to the site, we’ve got a smaller unknown group to work with now. As a result, we can pull some of those remaining unidentified links for manual review. And that’s where we see gems like this:
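The identified share and the manual-review batch fall out of the same labeled list. A minimal sketch, assuming live links as (url, label) pairs with “unknown” marking unclassified ones; the fixed random seed just makes the review batch reproducible, and the function name is hypothetical.

```python
import random


def review_sample(links, k=20, seed=0):
    """Return the share of live links identified and up to k unknown URLs to review by hand."""
    unknown = [url for url, label in links if label == "unknown"]
    identified = 1 - len(unknown) / len(links) if links else 0.0
    rng = random.Random(seed)  # seeded so the same batch comes back each run
    sample = rng.sample(unknown, min(k, len(unknown)))
    return identified, sample


links = [("http://a.example/", "comment"), ("http://b.example/", "unknown"),
         ("http://c.example/", "footer"), ("http://d.example/", "unknown")]
share, batch = review_sample(links, k=5)
print(f"{share:.0%} identified; review: {batch}")
```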

Sifting through a sample of these unclassified links, we see a bunch of pages like this, where the links look to a machine like contextual links in the main content area. Obviously, this doesn’t stand up to human review and is a completely indefensible link building strategy.

We also spot a bunch of links on sites with posts covering a wide range of topics. They’re publishing new content pretty frequently and every post has links in it with targeted anchor text (to highly profitable niches).

These types of posts come from one of the most effective link dropping strategies working today: the use of private blog networks. So let’s look into how we can classify these links more accurately by network, so we can start posting to them as well.

The Next Step: Domain Matching to Identify Private Blog Networks

Effective private blog networks are built to have no footprint. They look like completely independent sites, don’t interlink, and have no shared code. When done correctly, they won’t share an IP address, Google AdSense publisher ID, or Google Analytics account. In short, they can’t be spotted by analyzing the on-page content.
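The footprints named here are easy to test for, which is exactly why well-built networks avoid them. A minimal sketch that looks for AdSense publisher IDs (ca-pub-…) and classic Google Analytics account IDs (UA-…) shared across domains; the regexes are approximations of those ID formats, and fetching the pages is left out. A network built the way this paragraph describes would come back empty.

```python
import re
from collections import defaultdict

# Approximate patterns for AdSense publisher IDs and classic (UA-) Analytics IDs.
ADSENSE = re.compile(r"ca-pub-\d{10,16}")
ANALYTICS = re.compile(r"UA-\d{4,10}-\d{1,4}")


def shared_footprints(pages):
    """pages: dict of domain -> HTML. Returns IDs that appear on more than one domain."""
    seen = defaultdict(set)
    for domain, html in pages.items():
        for footprint in ADSENSE.findall(html) + ANALYTICS.findall(html):
            seen[footprint].add(domain)
    return {fid: sorted(domains) for fid, domains in seen.items() if len(domains) > 1}


pages = {
    "a.example": 'google_ad_client = "ca-pub-1234567890123456";',
    "b.example": '_setAccount","UA-123456-1"]); google_ad_client="ca-pub-1234567890123456"',
    "c.example": "UA-999999-2",
}
print(shared_footprints(pages))
```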

A truly private blog network (one owned entirely by a company that uses it for its own rankings) is almost impossible to identify. Blog networks that are open to paid membership (like Build My Rank, Authority Link Network, Linkvana, and High PR Society) are easier to spot, though not without cost.

To pick out the domains in these networks, we can create content with a shared unique phrase (or a link to a decoy domain). Once this content is published and indexed, we can scrape Google for listings containing the target phrase. With the list of URLs where our content has been published in hand, we can cull out the domain names and add them to a match list.
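The culling and matching steps can be sketched once you have the result URLs; this sketch doesn’t do the searching itself. It assumes links are (page URL, label) pairs, treats “www.” as insignificant, and uses a hypothetical “network: …” label format.

```python
from urllib.parse import urlparse


def _host(url):
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def network_domains(result_urls):
    """Cull unique host names from the URLs where the seeded phrase turned up."""
    return {h for h in (_host(u) for u in result_urls) if h}


def reclassify(links, network, network_name):
    """Relabel (page_url, label) pairs whose domain is on the network match list."""
    return [(url, f"network: {network_name}" if _host(url) in network else label)
            for url, label in links]


net = network_domains(["http://www.blog-one.example/post-1",
                       "http://blog-two.example/p?id=3",
                       "http://blog-one.example/post-9"])
print(reclassify([("http://blog-two.example/x", "unknown"),
                  ("http://other.example/", "footer")], net, "Network A"))
```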

With links classified into groups, we can now export lists in which every link can be duplicated with the same tactic. That makes it super easy to outsource this stuff. Send the list of blog comments to someone (or a team of people) and have them start matching links. If you’re particularly daring, you can completely automate this using Scrapebox; it all depends on your personal ethics and risk tolerance.
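Splitting the classified links into one file per link type is what makes handing each list to a different person practical. A minimal sketch using the `csv` module; the one-column layout and file naming are my own assumptions.

```python
import csv
from collections import defaultdict
from pathlib import Path


def export_by_type(links, out_dir):
    """Write one CSV per link type from (page_url, label) pairs; returns label -> file path."""
    groups = defaultdict(list)
    for page_url, label in links:
        groups[label].append(page_url)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = {}
    for label, urls in groups.items():
        # Hypothetical naming scheme: "web directory" -> web_directory.csv
        path = out / (label.replace(" ", "_").replace(":", "") + ".csv")
        with path.open("w", newline="", encoding="utf-8") as fh:
            writer = csv.writer(fh)
            writer.writerow(["page_url"])
            writer.writerows([u] for u in urls)
        written[label] = path
    return written
```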

The same goes for web directories: these are links that can be acquired by brute force, so commit lower-cost resources to acquiring them.

Sidebar, footer, and blogroll links are most likely paid links, part of a private blog network (hopefully we’ve matched the domains and reclassified those), or possibly valid resource lists. Contacting webmasters to find out what it takes to get a link in those areas requires a little more finesse, but with a little guidance, junior team members can handle these tasks.

If they match our private blog network list, we can submit posts through those networks.

A Word of Caution

Be careful who you copy. The site I referenced in this article has already been dropped by Google (I wouldn’t have published this if it were still ranking). I suspect it was a manual action, since the site had a pretty stable three months at the top (even in the age of Panda). Google just can’t allow low-quality sites to outrank billion-dollar brands for high-visibility terms, and I believe they took corrective action to create a better user experience for the search terms this site ranked for.

It’s possible that they didn’t just act at the domain level, but also stripped the sites that linked to AutoInsuranceQuotesEasy.com of their ability to pass juice. That would mean that if you were duplicating this site’s links, you’d be out of business too. Always weigh the risk against the reward, and NEVER gamble with a client’s site without getting approval (in writing) from them that they are comfortable with the risk of losing all of their rankings.

Want to Analyze Links for Yourself? I’ve Got You Covered

Obviously, since I have the data for the site used in this case study, I’ve actually built the tool described in the “Using Semantic Markup…” section of this post. For my purposes, it didn’t need a fancy design or multiple user management, and I ALMOST published this post without bothering to put that stuff together. Thankfully, some of my friends in the SEO community (Ethan Lyon, Mike King, Dan Shure, Nick Eubanks, Ian Howells) pushed me to make this application usable by other people, so…

You can start analyzing links using Link Detective for your own projects today.

If you want to know when new features get rolled into the application, just follow me at @eppievojt on Twitter. I’ll also make note of it on my (infrequently updated) personal website, eppie.net.
